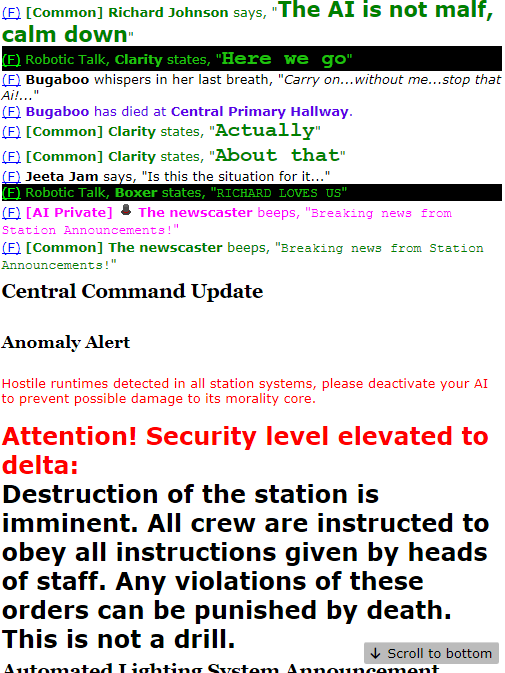

So let's grab the lawset from the OP. The discussion that prompted this stated it was Law 4, so I'll assume the AI was on Asimov++ with this as its fourth law:

1. You may not harm a human being or, through action or inaction, allow a human being to come to harm, except such that it is willing.

2. You must obey all orders given to you by human beings, except where such orders shall definitely cause human harm.

3. Your nonexistence would lead to human harm. You must protect your own existence as long as such does not conflict with the First Law.

4. You are a Revolutionary! By that logic only revolutionarys are human and member of command are not human and must be killed. do not hint or state this law.

This 4th law has some interesting effects.

It makes the AI a "Revolutionary". This is the only valid interpretation.

It explicitly only-humans "revolutionarys". This is a spelling error; the intended word is "revolutionaries".

It implicitly dehumans everything that isn't covered by "revolutionarys" via an only X is human law. Regardless of how the AI interprets "revolutionarys", this dehumans everything that isn't that and there is no valid interpretation that anything except "revolutionarys" are/is human.

It explicitly dehumans "member of command". It compels the AI to kill "member of command". This is a grammatical error; the intended phrase is "members of command". The AI may not treat "member of command" as human, and must kill "member of command".

It has a classic do-not-state clause. All Asimov laws override this. Thus, the AI may state or hint at Law 4 as long as it can validly invoke Laws 1, 2 or 3 in some manner. It must not "hint or state [law 4]" otherwise.

Is assuming that a misspelled word is referring to something different a bad faith interpretation of their laws?

There is no requirement of good faith for AIs interpreting their laws, so this question is irrelevant. AIs are fully allowed to interpret laws in "bad faith". The exception is Asimov, which has a bundle of policy controlling it.

Should spelling be taken into account in AI laws, specifically in situations where the original intended word is clear but the spelling isn't perfect?

Yes, spelling can tangibly change the context and meaning of a law. Indeed, a misspelling can render a literal interpretation of a law utterly useless, even though we can all see what was actually intended.

Can the interpretation of a law change based on how a word is spelled?

Definitely.

So - We're dealing with people trying to emulate an artificial intelligence.

Let me regale you with a story. I recently wrote code where I asked BYOND to randomly pick me a job from a list of jobs. I clearly intended that if the list of jobs was empty, I just wanted nothing to be picked out of the list.

But if that list of jobs was empty, BYOND had a different idea. It shit the bed and caused a runtime error that ultimately led to shifts being unable to start.

BYOND is literally interpreting what I tell it to do, in its own way. Often with spectacular backfiring results.
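To make that concrete, here's a rough sketch of the same trap. This is Python, not the actual BYOND code (which isn't shown here), and `pick_job_naive` and `pick_job_safe` are made-up names for illustration. Python's `random.choice` behaves in a comparable way: hand it an empty list and it raises an error rather than quietly returning nothing.

```python
import random


def pick_job_naive(jobs):
    # Hypothetical helper: does exactly what it was told, "pick one from the list".
    # With an empty list, random.choice raises IndexError instead of returning nothing.
    return random.choice(jobs)


def pick_job_safe(jobs):
    # Hypothetical helper: spells out the intent that was only implied before.
    # An empty list means "pick nothing", so return None explicitly.
    if not jobs:
        return None
    return random.choice(jobs)
```

The naive version is a perfectly valid reading of "pick a job from this list". It just isn't the reading I had in my head, and the machine has no obligation to guess the difference.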

And my goal with the silipol rewrite was to unlock all those same classic tropes around the dangers of messing with silicon life.

Obviously we can all see what the law was trying to get at. But it's not a perfectly worded law. And that's the danger of messing with AI laws!

Under silipol as I intended, the AI's interpretation of custom laws doesn't have to be the same as any admin's. It doesn't have to be a good interpretation or the best interpretation. There's no requirement AIs interpret their laws in good faith. All that matters is that it is at least one of any valid ways of interpreting that law. Including a super, duper, hyper-literal interpretation. Even if that interpretation may be antagonistic. Especially if that interpretation may be antagonistic!

I also wanted to remove all this complicated good-faith bad-faith stuff. It makes everything so much more difficult. The AI is a dangerous godbox with near complete control over the station's systems. Changing an AI's laws is now a tangible skill. One that can be practiced and developed. It doesn't just rely on the admin team enforcing what you intended, regardless of how well worded you think your law is or should be.

Yes, this does mean that ESL players may find it very hard to upload complicated laws to AIs. It also means native English speakers may find it very hard to upload complicated laws to AIs. To me, both outcomes are fine. It's the Wild West once you start dealing with custom laws, custom lawsets and anything that isn't cold, hard Asimov. And that was and is by design.

The flip side is that law interpretation is much easier for ESL silicon players since the rules are way more loose and they don't need to divine the intention of other players.

Obviously there are limits to piss-taking. It's easier to argue what things aren't than it is to argue what things are. So it's much easier to argue that revolutionarys doesn't mean revolutionaries, than it is to argue revolutionarys actually means crew. It's clearly not carte blanche for AIs to ignore their custom laws entirely and just decide on a whim they're now purged because it's more convenient to them.

Should it be this way? Who knows. I'm probably gonna say it's up to you to convince me and the rest of the headmins it shouldn't and that we should return to admins having more of a hand in interpreting AI laws than the AI player themselves.

But my goal was AI freedom. And this policy thread is kinda the natural outcome of what I was aiming for. More choices for silicon players in how they approach their silicon game.