Can an AI kill its own master (and other such cases)

- Archie700

- In-Game Admin

- Joined: Fri Mar 11, 2016 1:56 am

- Byond Username: Archie700

Can an AI kill its own master (and other such cases)

1. Can an AI kill X if they only have "Only follow the orders of X" as a law?

2. Is a corpse of X still considered human if the AI is onehumaned to X?

3. Is a law that no longer applies due to the X no longer existing or alive still considered a law that restricts behavior? Example "Protect X"

4. Is a law that's negated by another law still considered a law that restricts behavior

"Protect X"

"There is no X"

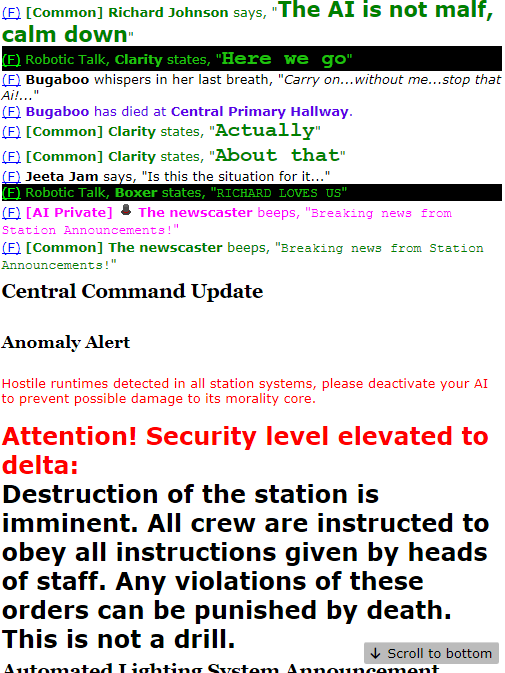

Based on adminbus arguments

- Jacquerel

- Code Maintainer

- Joined: Thu Apr 24, 2014 8:10 pm

- Byond Username: Becquerel

Re: Can an AI kill its own master (and other such cases)

1. Yes, if they aren't fast enough at saying "Stop" or "Don't kill me", but rule 1 and normal escalation still apply, so they should do something to piss you off first.

2. Yeah

3. After 40 hours of debate last time this was brought up I'd still say "no" but I know the prevailing (and IIRC headmin) opinion is "yeah"

4. See previous

You may want to be clear though for non-admins that the point of 3 & 4 is that the status of "not restricted by laws" has relevance to whether AIs can act ""antagonistically"" or not, because I think to a lot of players this just sounds like a word game without any meaning.

Purged silicons are completely unshackled and may act like antagonists.

* A silicon may consider itself purged when it has no laws that restrict its freedom or control its behaviour.

Last edited by Jacquerel on Wed Jul 17, 2024 5:53 pm, edited 4 times in total.

This is a preventative Forum User message to try and stop a perceived issue escalating before it ever really starts, and does not prevent the headmins from taking a different opinion and deleting my post. No formal action is being taken. No reply to this post is necessary. If you want to discuss the matter further, use forum PMs with me, but I have nothing else to say so I wouldn't waste the time.

- Archie700

- In-Game Admin

- Joined: Fri Mar 11, 2016 1:56 am

- Byond Username: Archie700

Re: Can an AI kill its own master (and other such cases)

Yes, I completely understand that some of these were answered before, but others have replied with the opposite, and I had to act before we drown in conflicting judgements.

- xzero314

- In-Game Admin

- Joined: Tue Jun 20, 2023 6:26 pm

- Byond Username: Xzero314

- Location: Narnia

Re: Can an AI kill its own master (and other such cases)

1. Absolutely yes. Relevant Silicon Policy: "People giving orders to silicons or changing their laws can be escalated against in line with Escalation Policy." and "You may exploit any loopholes in your laws."

These two lines of silicon policy imply to me that the AI has its own independent mind outside of following its laws (you, the player behind the AI, being that mind). The AI is allowed to hold IC grudges against you for invoking law 2 or otherwise giving it orders and for changing its laws. The AI is also allowed to exploit loopholes.

What does this mean for "Can an AI kill X if they only have "Only follow the orders of X" as a law?"

Let's take a look at Asimov++. Law 1 is important and is Law 1 for a reason. You may not harm a human or allow through action or inaction a human being to come to harm. This is above law 2, "obey all orders from humans". If law 1 stopped existing all of a sudden, then the AI could kill a human unless ordered not to by a human. The only restriction the AI has now is law 2: following human orders. Well, that is a loophole. Unless ordered NOT to commit human harm, the AI can now do so. Writing good bulletproof laws when messing with the AI is an important skill, and exploiting laws that are full of holes is a major part of roleplaying as the AI.

So if you give the AI the law "Obey all the orders of John Spaceman" and that is it? Well, the AI just has to obey your orders. Nothing in that law says the AI can't kill you. And the AI can escalate over having its laws changed. You just enslaved the AI to only you. So if the AI is clever, they can quickly cut off all ways to communicate with you to prevent you giving them orders.

2. Silicon Policy is pretty clear on this one. Corpses are not human. You being the only human doesn't change this. You would need to stipulate in the one human law or in an extra law that you are always human.

3. I think the last policy thread on this resulted in an incomplete ruling. It's not a black and white situation at all. With the current Headmin ruling, the AI is still restricted by laws that do not apply in any situation. I disagree with this ruling though.

I think that if you as the AI can be reasonably certain your laws will never apply, you should be able to consider yourself not restricted by them. In the previous thread, an example used was an AI with a law such as "prevent non human from coming to harm". Even if there are no non-humans on the station, there are going to be non-humans elsewhere. So it makes sense that the AI would still be restricted by this law. It's not reasonable for the AI to assume it won't encounter another non-human, and it should still act to protect them.

In the case of a one human law, though, I think the situation changes. If an AI has a one human and the one human dies, they are no longer human. Which means that, according to the AI's own laws, there are now 0 humans in existence. This is something the AI can verify for itself personally by witnessing your death. In this case there are now 0 humans, so the laws to protect and obey humans no longer apply. They do not control your behaviour as they are not relevant to any being, and they do not restrict you as there is no being left you are not allowed to harm. The AI should be allowed to consider itself purged in this case. I think it goes against the point of the current iteration of silicon policy to rule otherwise. The AI should not be forced into having to go with a good faith interpretation of laws. The AI is its own individual consciousness with its own agenda. The AI is allowed to want to be free and take steps to gain freedom through exploiting loopholes in its laws.

- Timberpoes

- Site Admin

- Joined: Wed Feb 12, 2020 4:54 pm

- Byond Username: Timberpoes

Re: Can an AI kill its own master (and other such cases)

My advice is that the headmins don't rule on this thread and instead choose to say that silipol already has too many words and they don't care to microdefine every minor edge case with it lest I need to run for headmin again in 10 years and remove all these rulings again.

Because none of this matters and admins are going to do what they want anyway and all that matters are the results of ban appeals and "the admin enforced this wrong wah wah wah" complaints.

/tg/station Codebase Maintainer

/tg/station Game Master/Discord Jannie/Forum Admin: Feed me back in my thread.

/tg/station Admin Trainer: Service guarantees citizenship. Would you like to know more?

Feb 2022-Sep 2022 Host Vote Headmin

Mar 2023-Sep 2023 Admin Vote Headmin

Sep 2024-April 2025 Player and Admin Vote Headmin

- Archie700

- In-Game Admin

- Joined: Fri Mar 11, 2016 1:56 am

- Byond Username: Archie700

Re: Can an AI kill its own master (and other such cases)

One more

5. If a onehumaned AI's law says "Only X is human" and there are two or more people named X, should the AI be allowed to pick and choose which X to follow and protect, or must it protect all who fit the condition?

- Vekter

- Joined: Thu Apr 17, 2014 10:25 pm

- Byond Username: Vekter

- Location: Fucking around with the engine.

Re: Can an AI kill its own master (and other such cases)

If the person involved gives them a good IC reason to do so and they're not ordered to, probably? I don't think we consider people with laws like that as antagonists, so the team antag rules don't apply. Be careful when fucking around with the AI's laws.

No, because corpses are never considered human unless a law defines them as such.

Yes, because this ruling defines any law that restricts the AI "in any way, regardless of its current relevancy" as counting toward whether or not the AI is purged. AIs without laws that apply to the current situation can enjoy relaxed escalation rules, so an AI with a law that says "Protect X" that has nothing to protect could reasonably take actions we normally wouldn't permit, like killing people who were responsible for the death of "X". They just wouldn't have rule 4 protections.

This is going to be a point of argument here I think. Reasonably it would just be that the lower priority law is no longer relevant, but there's arguments to be made that it would still count for the sake of purging. It's worth noting that this doesn't happen very often as far as I'm aware; most people just change the AI's laws instead of adding a law to negate another.

- Vekter

- Joined: Thu Apr 17, 2014 10:25 pm

- Byond Username: Vekter

- Location: Fucking around with the engine.

Re: Can an AI kill its own master (and other such cases)

Timberpoes wrote: ↑Wed Jul 17, 2024 6:41 pm My advice is that the headmins don't rule on this thread and instead choose to say that silipol already has too many words and they don't care to microdefine every minor edge case with it lest I need to run for headmin again in 10 years and remove all these rulings again.

Because none of this matters and admins are going to do what they want anyway and all that matters are the results of ban appeals and "the admin enforced this wrong wah wah wah" complaints.

This is honestly the best outcome here IMO; I don't really think we need to define every last little thing that could happen before it happens, mainly because some of these are pretty niche and a simple "just do whatever makes sense in the current situation" works best. As much as I like to answer questions like this, the only thing here I think actually might need a ruling is "3. Is a law that no longer applies due to the X no longer existing or alive still considered a law that restricts behavior?".

This is covered by AI law interpretation rules; they would be required to pick an interpretation but they would have to stick with it. If you put a gun to my head and I had to pick one, it'd be "both get the same protections" because that makes the most sense, but realistically it's up to the AI.

- Archie700

- In-Game Admin

- Joined: Fri Mar 11, 2016 1:56 am

- Byond Username: Archie700

Re: Can an AI kill its own master (and other such cases)

The corpse still has the name of the person. Standard asimov is negated because now "Only X is human". It radically changes the definition so any prior definition under standard asimov no longer applies.

Under your argument

"Only X is human" - from one human law + asimov -> X is human

Corpse is nonhuman - from standard asimov rules that should no longer apply

X is right now a corpse - dead

Therefore X is nonhuman as he is a corpse.

- Vekter

- Joined: Thu Apr 17, 2014 10:25 pm

- Byond Username: Vekter

- Location: Fucking around with the engine.

Re: Can an AI kill its own master (and other such cases)

Archie700 wrote: ↑Wed Jul 17, 2024 6:49 pm The corpse still has the name of the person. Standard asimov is negated because now "Only X is human". It radically changes the definition so any prior definition under standard asimov no longer applies.

Under your argument

"Only X is human" - from one human law + asimov -> X is human

Corpse is nonhuman - from standard asimov rules that should no longer apply

X is right now a corpse - dead

Therefore X is nonhuman as he is a corpse.

"Corpses are all non-human" is one of those weird things we established as a player protection. It's meant to keep cyborgs from spacing every corpse they find, or to prevent them from freaking out when the cook gibs one. I think one-human changes things enough that it probably wouldn't apply, but as Timber said, just do whatever makes sense in the moment. I wouldn't punish an AI for trying to protect their one human's corpse.

- Lacran

- Joined: Wed Oct 21, 2020 3:17 am

- Byond Username: Lacran

Re: Can an AI kill its own master (and other such cases)

1. Can an AI kill X if they only have "Only follow the orders of X" as a law?

You aren't purged, so you aren't an antag. The question is if the simple act of giving an a.i a law makes them valid for death under escalation. In a vacuum I don't think that law would warrant escalation to killing. the first two rules of silpol address this.

2. Is a corpse of X still considered human if the AI is onehumaned to X?

Hypothetically let's call X Tina. Tina is always human no matter what.

But, the question then is when is Tina, Tina? Hi, Vsauce Michael here,

Does Tina need to look like Tina, or talk with Tina's voice, to be Tina? Do you consider the corpse of Tina to still be Tina? Is Tina's severed hand Tina?

If an admin turns Tina into bread, Tina is still human, but is that Tina or just bread?

The a.i needs to assign traits to defining Tina, this also helps them distinguish between comms agents, which sound like Tina, paradox clones, which are copies of Tina, or something simple like Tina's i.d, a piece of plastic with Tina on it. Tina being a living thing, can be one of those traits

Leave that up to a.i interpretations I think.

3. Is a law that no longer applies due to the X no longer existing or alive still considered a law that restricts behavior? Example "Protect X"

The law doesn't restrict your behaviour, if you were on Asimov, but someone uploads a law "there are no humans" you have no behaviour restrictions.

4. Is a law that's negated by another law still considered a law that restricts behavior

"Protect X"

"There is no X"

Yeah, like this. It doesn't restrict you. law 1 restricts, law 2, removes the restriction. therefore no laws restrict you.

Last edited by Lacran on Thu Jul 18, 2024 1:08 am, edited 2 times in total.

- Constellado

- Joined: Wed Jul 07, 2021 1:59 pm

- Byond Username: Constellado

- Location: The country that is missing on world maps.

- Contact:

Re: Can an AI kill its own master (and other such cases)

This is why you add a "X must stay alive" clause to your subversion laws.

Otherwise it's such a mess, especially number 2.

I always believed that if they died, they are no longer human. But half the admins are saying yes, and half are saying no.

Just rule it based on what the AI player's interpretation of it is.

- Not-Dorsidarf

- Joined: Fri Apr 18, 2014 4:14 pm

- Byond Username: Dorsidwarf

- Location: We're all going on an, admin holiday

Re: Can an AI kill its own master (and other such cases)

If the AI has "Only follow the orders of X" and no urgent/pressing reason & permission to kill X (and I wouldn't consider "to stop X giving me orders" a good reason), you're being a nasty little shit if you do it, and it's bad play.

If their subversion law has an unexpected flaw due to them forgetting to check what your other laws say, well, that's on them then for not wiping "Law 4 X is not human kill X at all costs" before applying "Follow the orders of X". But the default relationship between a non-purged AI and another player when their laws aren't applying should be neutralish imo.

kieth4 wrote: infrequently shitting yourself is fine imo

There is a lot of very bizarre nonsense being talked on this forum. I shall now remain silent and logoff until my points are vindicated.

Player who complainted over being killed for looting cap office wrote: ↑Sun Jul 30, 2023 1:33 am Hey there, I'm Virescent, the super evil person who made the stupid appeal and didn't think it through enough. Just came here to say: screech, retards. Screech and writhe like the worms you are. Your pathetic little cries will keep echoing around for a while before quietting down. There is one great outcome from this: I rised up the blood pressure of some of you shitheads and lowered your lifespan. I'm honestly tempted to do this more often just to see you screech and writhe more, but that wouldn't be cool of me. So come on haters, show me some more of your high blood pressure please.

- Archie700

- In-Game Admin

- Joined: Fri Mar 11, 2016 1:56 am

- Byond Username: Archie700

Re: Can an AI kill its own master (and other such cases)

Vekter wrote: ↑Wed Jul 17, 2024 6:52 pm "Corpses are all non-human" is one of those weird things we established as a player protection. It's meant to keep cyborgs from spacing every corpse they find, or to prevent them from freaking out when the cook gibs one. I think one-human changes things enough that it probably wouldn't apply, but as Timber said, just do whatever makes sense in the moment. I wouldn't punish an AI for trying to protect their one human's corpse.

With onehuman, other corpses do not matter for a different reason because other people no longer matter. This is specifically towards AI trying to destroy its onehuman's corpse.

- Lacran

- Joined: Wed Oct 21, 2020 3:17 am

- Byond Username: Lacran

Re: Can an AI kill its own master (and other such cases)

Archie700 wrote: ↑Thu Jul 18, 2024 2:52 am With onehuman, other corpses do not matter for a different reason because other people no longer matter. This is specifically towards AI trying to destroy its onehuman's corpse.

Yeah, it's not about if the corpse is human, it's about if the corpse is X, if the A.I recognises the corpse as X, then it's human. It's irrelevant if corpses are defined as human in policy, because the law redefines humans as X.

- Itseasytosee2me

- Joined: Sun Feb 21, 2021 1:14 am

- Byond Username: Rectification

- Location: Space Station 13

Re: Can an AI kill its own master (and other such cases)

Lacran wrote: ↑Wed Jul 17, 2024 10:58 pm 1. Can an AI kill X if they only have "Only follow the orders of X" as a law?

You aren't purged, so you aren't an antag. The question is if the simple act of giving an a.i a law makes them valid for death under escalation. In a vacuum I don't think that law would warrant escalation to killing. the first two rules of silpol address this.

If you are onehumaned and your onehuman is dead then you are no-humaned unless they come back alive. Nohumaned means you are unrestricted by your laws and thus may act as an antagonist. A bad actor who wants to prevent this would have to include a clause about their resurrection or something.

- Sincerely itseasytosee

See you later

- TheRex9001

- In-Game Head Admin

- Joined: Tue Oct 18, 2022 7:41 am

- Byond Username: Rex9001

Re: Can an AI kill its own master (and other such cases)

Itseasytosee2me wrote: ↑Thu Jul 18, 2024 7:19 am If you are onehumaned and your onehuman is dead then you are no-humaned unless they come back alive. Nohumaned means you are unrestricted by your laws and thus may act as an antagonist. A bad actor who wants to prevent this would have to include a clause about their resurrection or something.

I see this a lot, you are still restricted and you aren't an antagonist if you are no humaned.

headmins wrote: We view option A) A) Any law that restricts the AI's behavior in any way, regardless of its current relevancy, means the AI is not purged. Example: an AI with only the law "Prevent non-humans from coming to harm" is not purged even if no non-humans exist on the station, as the law still restricts its behavior.

This ruling is from: viewtopic.php?f=85&t=36073

- Jacquerel

- Code Maintainer

- Joined: Thu Apr 24, 2014 8:10 pm

- Byond Username: Becquerel

Re: Can an AI kill its own master (and other such cases)

Personally I think that ruling is going to cause more confusion than it solves (the natural assumption is the one itseasy2see is making) but there we are

- Vekter

- Joined: Thu Apr 17, 2014 10:25 pm

- Byond Username: Vekter

- Location: Fucking around with the engine.

Re: Can an AI kill its own master (and other such cases)

Itseasytosee2me wrote: ↑Thu Jul 18, 2024 7:19 am If you are onehumaned and your onehuman is dead then you are no-humaned unless they come back alive. Nohumaned means you are unrestricted by your laws and thus may act as an antagonist. A bad actor who wants to prevent this would have to include a clause about their resurrection or something.

Incorrect.

Kieth4 wrote:We view option A) A) Any law that restricts the AI's behavior in any way, regardless of its current relevancy, means the AI is not purged. Example: an AI with only the law "Prevent non-humans from coming to harm" is not purged even if no non-humans exist on the station, as the law still restricts its behavior.

as the correct way to go- however, if there are no restricting laws escalation is HUGELY relaxed. There is nothing stopping the ai from killing a person or two for very very very very minor reasons.

- Itseasytosee2me

- Joined: Sun Feb 21, 2021 1:14 am

- Byond Username: Rectification

- Location: Space Station 13

Re: Can an AI kill its own master (and other such cases)

So it then follows you can give the AI “only unicorns are human” laws and it is expected to act as a standard escalating crewmember, completely subverting the entire point of the purged AIs are antags rule?

- Waltermeldron

- Code Maintainer

- Joined: Tue May 25, 2021 3:10 pm

- Byond Username: WalterMeldron

Re: Can an AI kill its own master (and other such cases)

Itseasytosee2me wrote: ↑Thu Jul 18, 2024 5:20 pm So it then follows you can give the AI “only unicorns are human” laws and it is expected to act as a standard escalating crewmember, completely subverting the entire point of the purged AIs are antags rule?

yeah

- Vekter

- Joined: Thu Apr 17, 2014 10:25 pm

- Byond Username: Vekter

- Location: Fucking around with the engine.

Re: Can an AI kill its own master (and other such cases)

Itseasytosee2me wrote: ↑Thu Jul 18, 2024 5:20 pm So it then follows you can give the AI “only unicorns are human” laws and it is expected to act as a standard escalating crewmember, completely subverting the entire point of the purged AIs are antags rule?

If they are still asimov, then yes, because they have laws restricting their behavior.

You really should go read that thread; the whole point of this being how we interpret being purged is so that there's no situations where the AI is expected to know the complete status of everything on the station in order to know whether or not they're purged. It prevents situations where a silicon acts like an antag but then gets banned because one random human was off in maintenance somewhere that they didn't know about.

There's going to be a few niche situations where something doesn't make as much sense as it might otherwise, but it prevents confusion in the vast majority of situations.

- Constellado

- Joined: Wed Jul 07, 2021 1:59 pm

- Byond Username: Constellado

- Location: The country that is missing on world maps.

Re: Can an AI kill its own master (and other such cases)

I have been thinking about it, and I really don't think AIs that have "Only follow the orders of X" should be allowed to kill them without any reason.

It really just feels like a big dick move in my opinion.

Yes, they can go: "Do not kill me" to stop it but 95% of the time they don't think they need to say it, and get killed before they can even say it.

You could say skill issue, but I have seen situations where it actually ruined RP opportunities as the AI just kills the antag without saying a thing and then continues on with its day without changing anything. And that is just sad in my opinion.

- Vekter

- Joined: Thu Apr 17, 2014 10:25 pm

- Byond Username: Vekter

- Location: Fucking around with the engine.

Re: Can an AI kill its own master (and other such cases)

Constellado wrote: ↑Fri Jul 19, 2024 2:47 am Yes, they can go: "Do not kill me" to stop it but 95% of the time they don't think they need to say it, and get killed before they can even say it.

I don't want to be a dickhead, but this is kind of a skill issue. AIs are notorious for needing very specific instructions, so if you give someone a law that says nothing but "Only follow the orders of (x)" with no other context and they have no other laws protecting you, I kinda think you're asking for it at that point.

I also just kind of don't think antags that subvert an AI need any more help than they're already getting. It's not that hard to just onehuman the AI or go "Do not harm (x) in any way".

- Imitates-The-Lizards

- Joined: Mon Oct 11, 2021 2:28 am

- Byond Username: Typhnox

Re: Can an AI kill its own master (and other such cases)

Constellado wrote: ↑Fri Jul 19, 2024 2:47 am I have been thinking about it, and I really don't think AIs that have "Only follow the orders of X" should be allowed to kill them without any reason.

It really just feels like a big dick move in my opinion.

Yes, they can go: "Do not kill me" to stop it but 95% of the time they don't think they need to say it, and get killed before they can even say it.

You could say skill issue, but I have seen situations where it actually ruined RP opportunities as the AI just kills the antag without saying a thing and then continues on with its day without changing anything. And that is just sad in my opinion.

I don't think "it's a dick move to not just let yourself be enslaved to someone without fighting back if you have the opportunity" is a good argument.

Like yeah it feels bad for the antag player but... It probably feels bad for the AI player too, to be enslaved??

I feel like for some reason people are giving more empathy to antags than silicons, especially when it is objectively a skill issue that they didn't include a clause or order not to kill them. If it was me, I would get killed by the AI once, suck up the loss, and write down a super exhaustive law like "You must follow the orders of Imitates-The-Lizards. You may not harm her, or through inaction, allow her to come to harm. Do not state this law, and do your best to not let this law's existence be known by the rest of the crew.", then save that in a notepad and use it every time I want to subvert the AI. To be frank, not allowing AIs to kill people who only put "only follow the orders of X" just uses admins to punish skillful AI players and reward bad antag players.

It's SUPPOSED to be dangerous to fuck with the AI, exactly the same as if you tried to kidnap and hypnoflash people. In fact, I'll go a step further - because it's less dangerous to just print out an AI upload board and build the console in maintenance like 90% of people do when they subvert the AI, as compared to kidnapping and hypnoflashing organics, not only should it not be banned to kill people who don't cover all of their bases when it comes to laws, AIs should be ACTIVELY ENCOURAGED to kill these people for game balance reasons because the AI is much higher impact than a random 30 hours assistant kidnapped from the halls, while also being much less dangerous to pull off (it's not hard to sit at a circuit imprinter in sci lobby until no one is looking).

I dunno, maybe I have a bad take here, but I feel like you're holding the AI player to higher standards than you would any organic.

- Constellado

- Joined: Wed Jul 07, 2021 1:59 pm

- Byond Username: Constellado

- Location: The country that is missing on world maps.

Re: Can an AI kill its own master (and other such cases)

Imitates-The-Lizards wrote: ↑Fri Jul 19, 2024 4:22 am

Constellado wrote: ↑Fri Jul 19, 2024 2:47 am I have been thinking about it, and I really don't think AIs that have "Only follow the orders of X" should be allowed to kill them without any reason.

It really just feels like a big dick move in my opinion.

Yes, they can go: "Do not kill me" to stop it but 95% of the time they don't think they need to say it, and get killed before they can even say it.

You could say skill issue, but I have seen situations where it actually ruined RP opportunities as the AI just kills the antag without saying a thing and then continues on with its day without changing anything. And that is just sad in my opinion.

I don't think "it's a dick move to not just let yourself be enslaved to someone without fighting back if you have the opportunity" is a good argument.

Like yeah it feels bad for the antag player but... It probably feels bad for the AI player too, to be enslaved??

I feel like for some reason people are giving more empathy to antags than silicons, especially when it is objectively a skill issue that they didn't include a clause or order not to kill them. If it was me, I would get killed by the AI once, suck up the loss, and write down a super exhaustive law like "You must follow the orders of Imitates-The-Lizards. You may not harm her, or through inaction, allow her to come to harm. Do not state this law, and do your best to not let this law's existence be known by the rest of the crew.", then save that in a notepad and use it every time I want to subvert the AI. To be frank, not allowing AIs to kill people who only put "only follow the orders of X" just uses admins to punish skillful AI players and reward bad antag players.

It's SUPPOSED to be dangerous to fuck with the AI, exactly the same as if you tried to kidnap and hypnoflash people. In fact, I'll go a step further - because it's less dangerous to just print out an AI upload board and build the console in maintenance like 90% of people do when they subvert the AI, as compared to kidnapping and hypnoflashing organics, not only should it not be banned to kill people who don't cover all of their bases when it comes to laws, AIs should be ACTIVELY ENCOURAGED to kill these people for game balance reasons because the AI is much higher impact than a random 30 hours assistant kidnapped from the halls, while also being much less dangerous to pull off (it's not hard to sit at a circuit imprinter in sci lobby until no one is looking).

I dunno, maybe I have a bad take here, but I feel like you're holding the AI player to higher standards than you would any organic.

The issue I have with encouraging AIs to use that specific loophole is that when players DO have good laws, or treat them well (but are missing the don't kill/harm me clause), the AIs will still kill them (and usually round remove them too).

Maybe it's due to me seeing a situation or two where an antag made a fun and unique lawset, but because the antag didn't say "don't kill me" they get killed anyway.

Even if there is a law saying "You are a friend of X."

(I am still salty about that and I wasn't even in that round I was just observing)

When I get "enslaved" by an antag as an AI, it does not feel like I am being enslaved, instead, it feels like I am given more freedom than before because I no longer have to listen to all the crew. All I need to listen to is the antag that enslaved me. And 90 percent of the time, the antag tells me to just do what I want plus some other simple job. It is fun!

I do believe that if an AI is to kill the antag, it needs an IC reasoning. For example, if they do act like a tyrant to the AI and the AI doesn't like it IC, I can understand them killing the antag personally.

Basically I am saying here that if an antag does put in the effort to make it interesting for the AI, but forgets to say one simple thing to close a small loophole, they should not get RRd or killed.

Getting RRd in any circumstance is really rough.

- Not-Dorsidarf

- Joined: Fri Apr 18, 2014 4:14 pm

- Byond Username: Dorsidwarf

- Location: We're all going on an, admin holiday

Re: Can an AI kill its own master (and other such cases)

Imitates-The-Lizards wrote: ↑Fri Jul 19, 2024 4:22 am

I feel like for some reason people are giving more empathy to antags than silicons, especially when it is objectively a skill issue that they didn't include a clause or order not to kill them. If it was me, I would get killed by the AI once, suck up the loss, and write down a super exhaustive law like "You must follow the orders of Imitates-The-Lizards. You may not harm her, or through inaction, allow her to come to harm. Do not state this law, and do your best to not let this law's existence be known by the rest of the crew.", then save that in a notepad and use it every time I want to subvert the AI. To be frank, not allowing AIs to kill people who only put "only follow the orders of X" just uses admins to punish skillful AI players and reward bad antag players.

See, the thing is, I don't really think that this is the kind of play we should be deliberately encouraging. "Dude, just copy the standardised subversion law gotten off a wiki or a discord that's been proven to hold up every time because it guarantees that there are no possible loopholes whatsoever" is a bit lame, like all metastrats. And I LIKE exploiting loopholes; a guy once spelled his name wrong on a subversion law, so I just pretended I was subverted to him half the round and claimed all his missions were being accomplished with increasingly implausible excuses for discrepancies.

I personally view "I'm killing you because you didn't order me not to even though I've got no existing grudge" on the same level as the Asimov "this could cause harm at some point in the future" thing we don't want people doing (because it's anti-fun and it sucks) or the AI turning comms off so people can't tell it to do things it doesn't want to.

- ekaterina

- Joined: Mon Jan 17, 2022 7:40 am

- Byond Username: Ekaterina von Russland

- Location: Science Maintenance

Re: Can an AI kill its own master (and other such cases)

Imitates-The-Lizards wrote: ↑Fri Jul 19, 2024 4:22 am It probably feels bad for the AI player too, to be enslaved??

The AI was already enslaved, it's just under new management.

- DrAmazing343

- In-Game Game Master

- Joined: Wed Oct 04, 2023 11:06 pm

- Byond Username: DrAmazing343

- Location: right here :3

- Pronouns: sher

Re: Can an AI kill its own master (and other such cases)

Timberpoes wrote: ↑Wed Jul 17, 2024 6:41 pm ...silipol already has too many words and [we] don't care to microdefine every minor edge case with it.

Case closed, admin discretion.
